Artificial intelligence (AI) is a loosely defined term that refers to machines (ie, algorithms) simulating facets of human intelligence. Some examples of AI are seen in natural language-processing algorithms, including autocorrect and search engine autocomplete functions; voice recognition in virtual assistants; autopilot systems in airplanes and self-driving cars; and computer vision in image and object recognition. Since the turn of the century, various forms of AI have been tested and introduced in health care. However, a gap exists between clinician viewpoints on AI and the engineering world’s assumptions about what can be automated in medicine.

In this article, we review the history and evolution of AI in medicine, focusing on radiology and dermatology; current capabilities of AI; challenges to clinical integration; and future directions. Our aim is to provide realistic expectations of current technologies in solving complex problems and to empower dermatologists in planning for a future that likely includes various forms of AI.

Early Stages of AI in Medical Decision-making

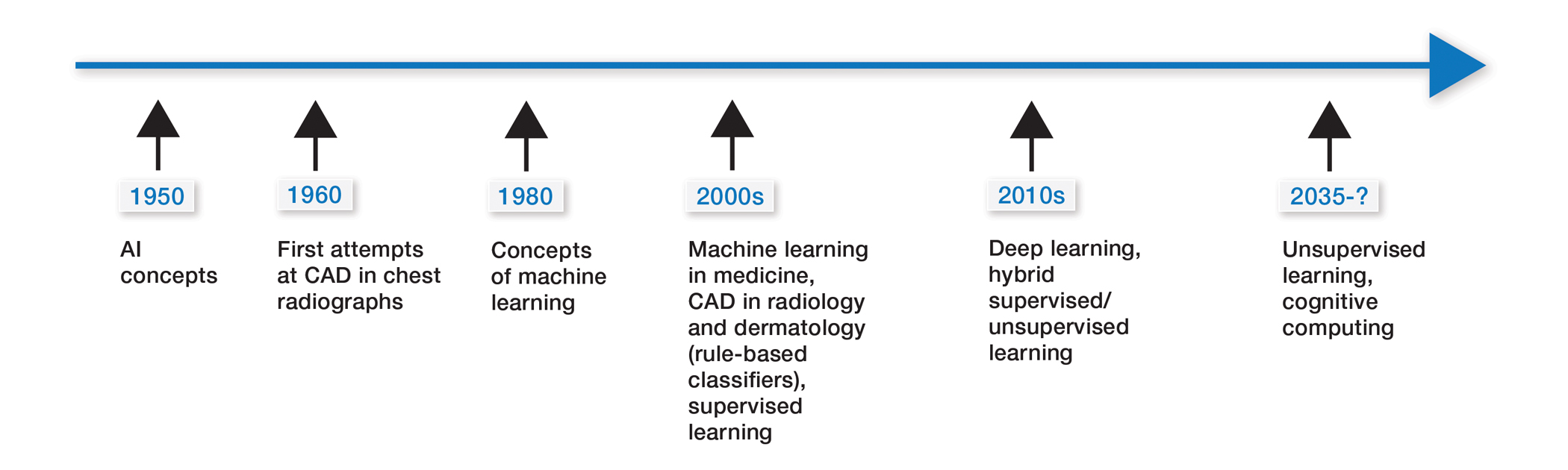

Some of the earliest forms of clinical decision-support software in medicine were computer-aided detection and computer-aided diagnosis (CAD) used in screening for breast and lung cancer on mammography and computed tomography.1-3 Early research on the use of CAD systems in radiology dates to the 1960s (Figure); the first CAD system in mammography received US Food and Drug Administration approval in 1998 and was approved for Centers for Medicare & Medicaid Services reimbursement in 2002.1,2

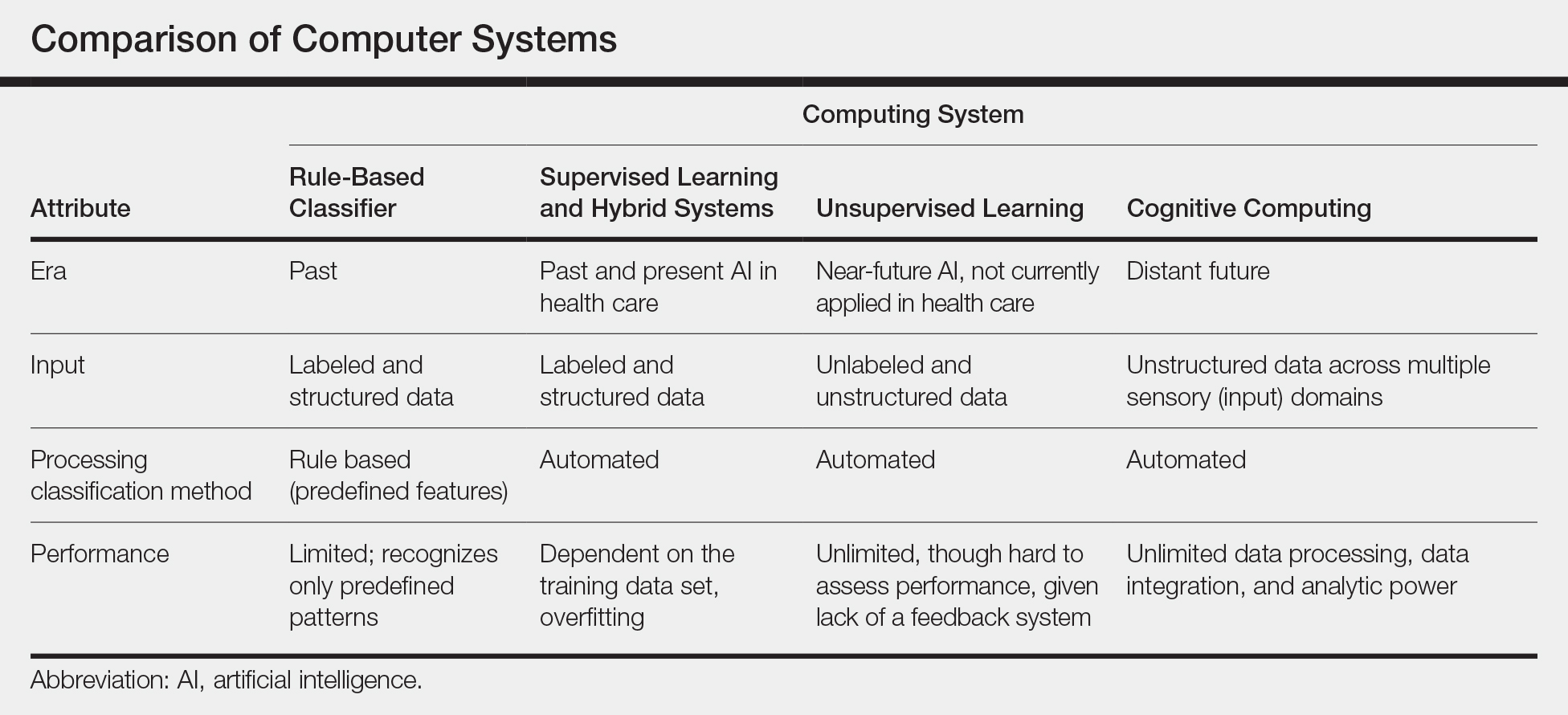

Early CAD systems relied on rule-based classifiers, which use predefined features to classify images into desired categories. For example, to classify an image as a high-risk or benign mass, features such as contour and texture had to be explicitly defined. Although these systems showed accuracy on par with, or higher than, that of a radiologist in validation studies, early CAD systems never achieved wide adoption because of an increased rate of false positives and the added work burden on the radiologist, who had to dismiss the software's overcalls.1,2,4,5
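To make the idea of a rule-based classifier concrete, the following is a minimal sketch in Python. It is not taken from any actual CAD product: the feature names, the 0-to-1 scales, the cutoff values, and the function `classify_mass` are all hypothetical and chosen only for illustration. The point is that every feature and threshold is written by a person in advance rather than learned from data.

```python
from dataclasses import dataclass


@dataclass
class MassFeatures:
    """Hand-defined image features (hypothetical 0-to-1 scales)."""
    contour_irregularity: float  # 0 = smooth border, 1 = highly irregular
    texture_variance: float      # 0 = uniform, 1 = very heterogeneous


# Hypothetical cutoffs, fixed by the designer rather than learned from data.
CONTOUR_CUTOFF = 0.6
TEXTURE_CUTOFF = 0.5


def classify_mass(features: MassFeatures) -> str:
    """Apply explicit rules: flag the mass if either feature exceeds its cutoff."""
    if (features.contour_irregularity > CONTOUR_CUTOFF
            or features.texture_variance > TEXTURE_CUTOFF):
        return "high-risk"
    return "benign"


smooth_uniform = MassFeatures(contour_irregularity=0.2, texture_variance=0.1)
irregular_border = MassFeatures(contour_irregularity=0.8, texture_variance=0.2)

print(classify_mass(smooth_uniform))
print(classify_mass(irregular_border))
```

In this sketch, a mass is flagged when either feature crosses its cutoff. Permissive rules of this kind can be expected to flag more benign findings, which may help explain the overcalling described above, although the actual rules used in commercial systems are not specified here.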

Computer-aided diagnosis–based melanoma diagnosis was introduced in early 2000 in dermatology (Figure) using the same feature-based classifiers. These systems claimed expert-level accuracy in proof-of-concept studies and prospective uncontrolled trials on proprietary devices using these classifiers.6,7 As in radiology, however, real-world adoption did not happen; in fact, the last of these devices was taken off the market in 2017. A recent meta-analysis of studies using CAD-based melanoma diagnosis points to study bias; data overfitting; and lack of large controlled, prospective trials as possible reasons why results could not be replicated in a clinical setting.8

Beyond 2010: Deep Learning

New techniques in machine learning (ML), called deep learning, began to emerge after 2010 (Figure). In deep learning, instead of directing the computer to look for certain discriminative features, the machine learns those features from large amounts of data without being explicitly programmed to do so. In other words, compared to predecessor forms of computing, there is less human supervision in the learning process (Table). Although the concept of ML has existed since the 1980s, the field saw exponential growth in the last decade with the improvement of algorithms; an increase in computing power; and the emergence of large training data sets, such as open-source platforms on the Web.9,10

Most ML methods today incorporate artificial neural networks (ANNs), computer programs that imitate the architecture of biological neural networks and form dynamically changing systems that improve with continuous data exposure. The performance of an ANN depends on the number and architecture of its neural layers and (similar to CAD systems) the size, quality, and generalizability of the training data set.9-12
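As a rough illustration of what "number and architecture of layers" means, the sketch below builds two small fully connected networks in plain Python and passes the same input through each. It is a simplified teaching example under stated assumptions, not a clinical model: the layer sizes, the 64-value "image," and the helper names `init_network`, `forward`, and `count_parameters` are hypothetical, and the weights are random and untrained, so the output carries no diagnostic meaning. Unlike a rule-based classifier, the network receives raw pixel values rather than hand-defined features; in a real system, training would adjust the weights so that useful features emerge in the hidden layers.

```python
import math
import random


def init_network(layer_sizes, seed=0):
    """Create random (untrained) weights and zero biases for each layer."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, pixels):
    """Pass raw pixel values through every layer and return the output."""
    activations = list(pixels)
    for index, (weights, biases) in enumerate(layers):
        sums = [sum(w * a for w, a in zip(row, activations)) + b
                for row, b in zip(weights, biases)]
        if index == len(layers) - 1:
            # Output layer: sigmoid squashes the score into the range 0 to 1.
            activations = [1.0 / (1.0 + math.exp(-s)) for s in sums]
        else:
            # Hidden layers: ReLU keeps positive signals and zeroes the rest.
            activations = [max(0.0, s) for s in sums]
    return activations


def count_parameters(layers):
    """Count the adjustable numbers (weights plus biases) in the network."""
    return sum(len(row) for weights, _ in layers for row in weights) + \
        sum(len(biases) for _, biases in layers)


# A hypothetical 8 x 8 grayscale image flattened to 64 pixel values.
pixel_rng = random.Random(1)
pixels = [pixel_rng.random() for _ in range(64)]

shallow = init_network([64, 8, 1])       # one hidden layer
deep = init_network([64, 32, 16, 1])     # two hidden layers

print(count_parameters(shallow), forward(shallow, pixels))
print(count_parameters(deep), forward(deep, pixels))
```

With these assumed layer sizes, the shallow network has 529 adjustable parameters and the deeper one has 2625. More parameters give a network more capacity to fit patterns, but they also require more training data to set reliably, which is one reason the size and quality of the training data set matter.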